All You Need Is A Reference

Cross-modality Referring Segmentation for Abdominal MRI

The dataset, code, and trained model weights will be made publicly available after anonymous peer review.

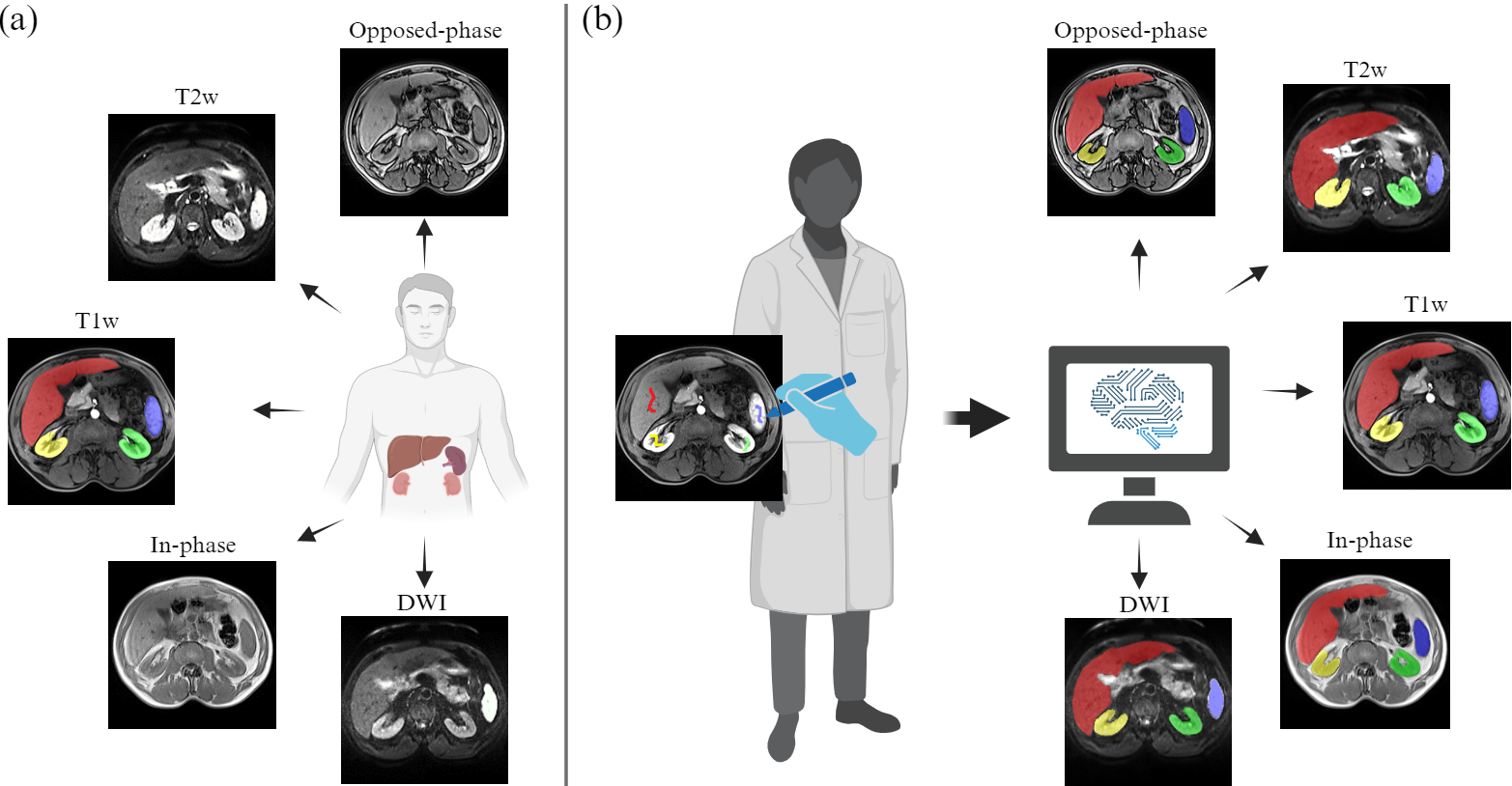

Task Description

Task definition of referring segmentation in multi-modality MRI images.

- Each patient is scanned with five MRI modalities: one reference modality (T1w) and four target modalities (T2w, DWI, In-phase, and Opposed-phase). The task is to generate segmentation masks for all four target modalities from a prompt given on the single reference modality.

- Clinical application scenario: The user first draws scribbles on the organs of interest in the reference modality. The model then outputs, simultaneously for all other MR modalities, segmentation masks of the scribbled organs.

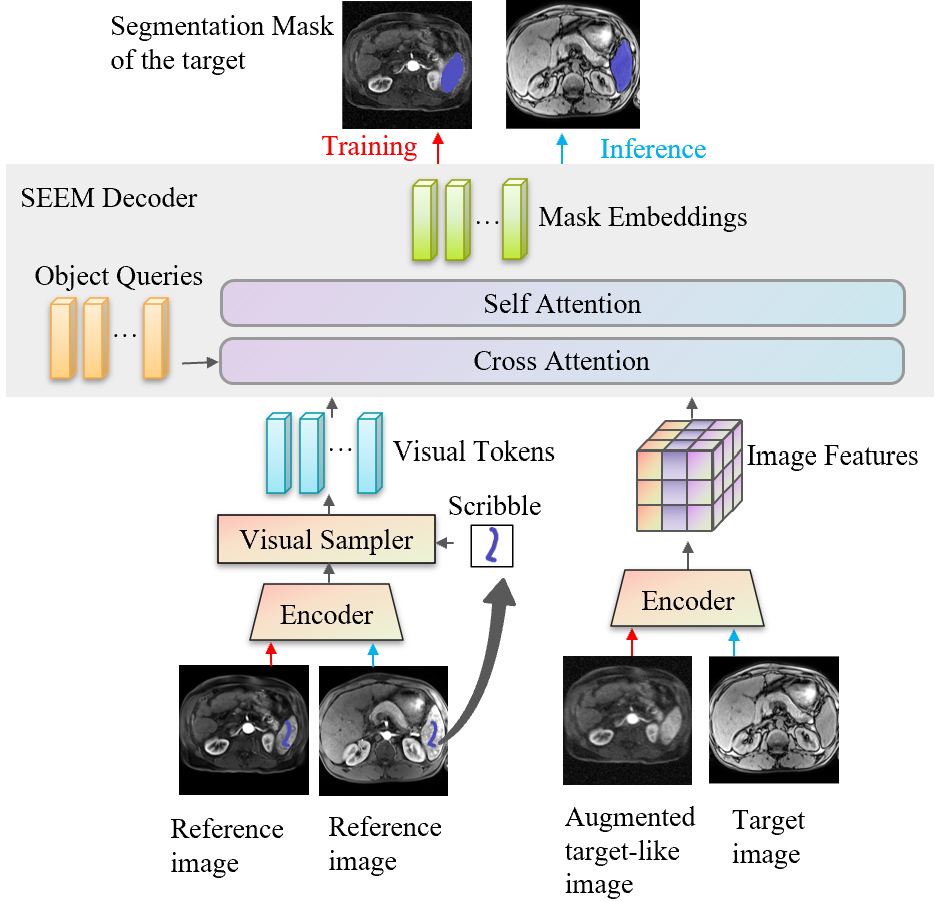

Network

CrossMR Architecture. The scribble prompt and the reference image are used to extract image features from the prompted region; these features form the visual tokens. Learnable object queries and visual tokens cross-attend to the target image features and self-attend among themselves. The object queries are then linearly mapped to mask embeddings and class embeddings, which are used to output the segmentation mask and the text label, respectively.
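The data flow described above can be illustrated with a small NumPy sketch. It is not the CrossMR implementation: the function names, the single-head attention without learned projections, the random stand-ins for learned weights, and the dot product between mask embeddings and target pixel features (a common choice in query-based segmentation) are all assumptions made for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attend(queries, keys_values):
    """Single-head scaled dot-product attention (no learned projections)."""
    d = queries.shape[-1]
    weights = softmax(queries @ keys_values.T / np.sqrt(d))
    return weights @ keys_values

def visual_tokens_from_scribble(ref_feats, scribble):
    """Assumed prompt encoding: gather reference features at scribbled pixels.
    ref_feats: (H, W, D) float array; scribble: (H, W) boolean array."""
    return ref_feats[scribble]  # (num_scribbled_pixels, D)

def crossmr_sketch(ref_feats, scribble, tgt_feats,
                   num_queries=4, num_classes=3, seed=0):
    """Hypothetical forward pass for one target modality."""
    rng = np.random.default_rng(seed)
    H, W, D = tgt_feats.shape
    tokens = visual_tokens_from_scribble(ref_feats, scribble)
    queries = rng.normal(size=(num_queries, D))  # stand-in for learnable object queries
    x = np.concatenate([queries, tokens], axis=0)
    tgt_flat = tgt_feats.reshape(H * W, D)
    x = x + attend(x, tgt_flat)  # cross-attention to target image features
    x = x + attend(x, x)         # self-attention among queries and visual tokens
    q = x[:num_queries]
    # Linear maps to mask and class embeddings (random stand-ins for learned weights).
    w_mask = rng.normal(size=(D, D)) / np.sqrt(D)
    w_cls = rng.normal(size=(D, num_classes)) / np.sqrt(D)
    mask_emb = q @ w_mask
    class_logits = q @ w_cls
    # Assumption: one mask per query via dot product with target pixel features.
    mask_logits = (mask_emb @ tgt_flat.T).reshape(num_queries, H, W)
    return mask_logits, class_logits

# Toy usage with random features in place of a real image encoder.
rng = np.random.default_rng(1)
ref_feats = rng.normal(size=(8, 8, 16))
tgt_feats = rng.normal(size=(8, 8, 16))
scribble = np.zeros((8, 8), dtype=bool)
scribble[2:5, 3] = True  # a short vertical scribble covering three pixels
mask_logits, class_logits = crossmr_sketch(ref_feats, scribble, tgt_feats)
print(mask_logits.shape, class_logits.shape)  # (4, 8, 8) (4, 3)
```

In the task setting, the same reference tokens would be reused for each of the four target modalities by calling the function once per target feature map.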

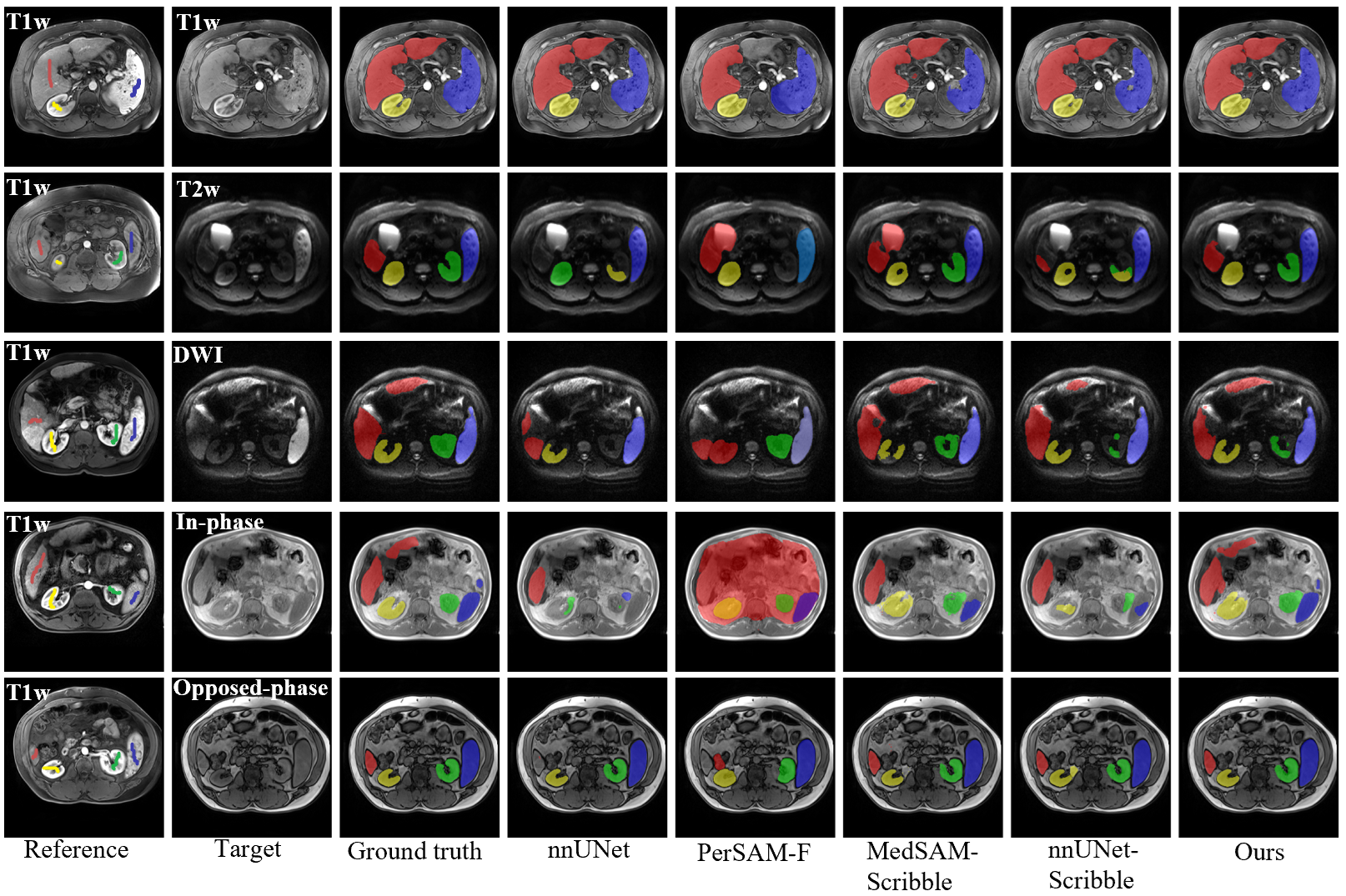

Results

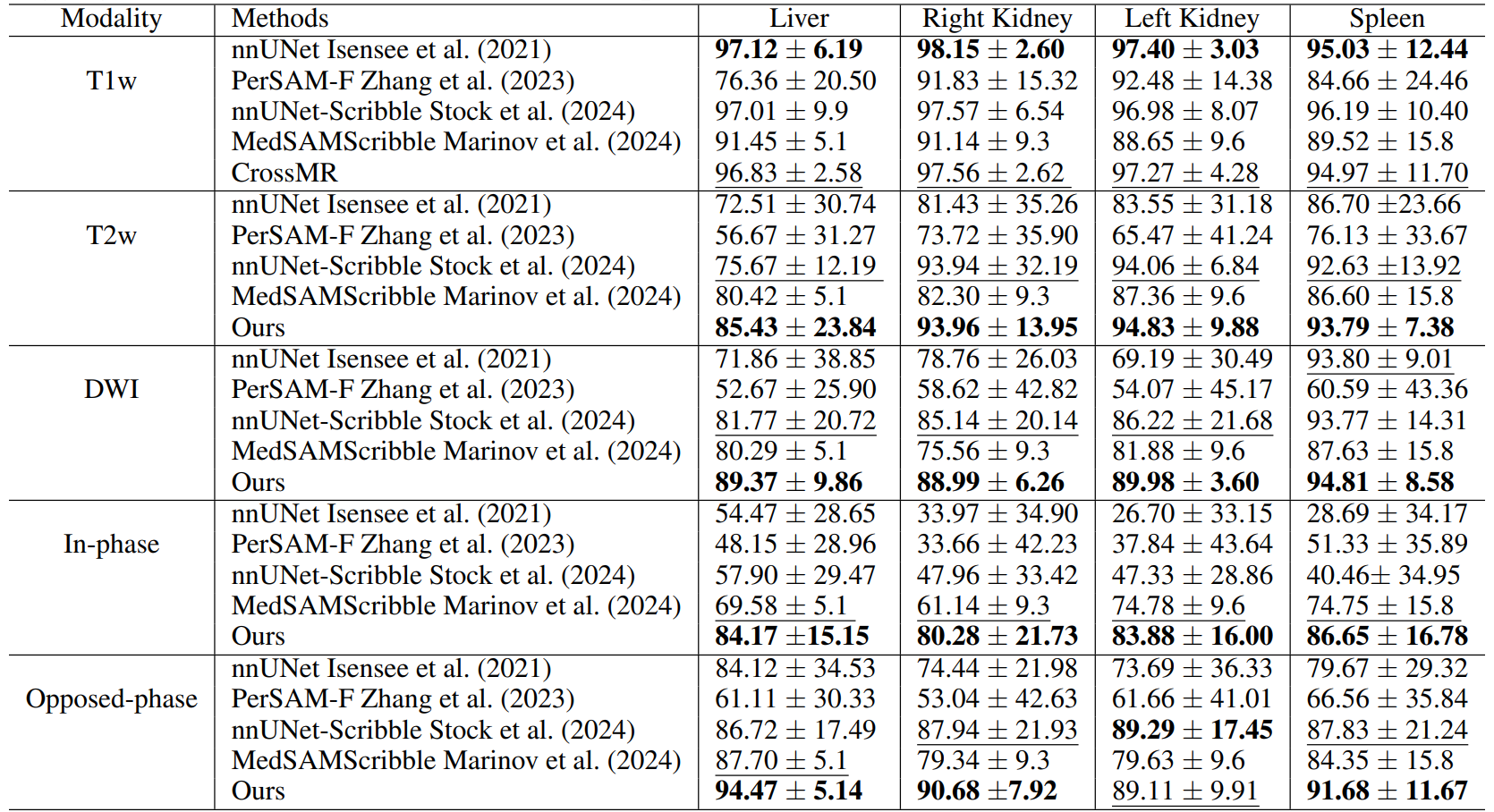

Table 1. Quantitative segmentation results. Organ-wise Dice similarity coefficient (DSC) scores of the baseline model and the proposed method on one in-distribution modality (T1w) and four out-of-distribution modalities (T2w, DWI, In-phase, and Opposed-phase).
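For readers unfamiliar with the metric, the DSC between a predicted mask A and a ground-truth mask B is 2|A ∩ B| / (|A| + |B|). The following is a minimal sketch of an organ-wise computation on flattened label maps; the function names, the integer label encoding, and the convention that two empty masks score 1.0 are assumptions for illustration, not the evaluation code behind Table 1.

```python
def dice_score(pred, truth):
    """DSC between two binary masks given as flat sequences of truthy/falsy values."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total

def organ_wise_dice(pred_labels, truth_labels, organ_ids):
    """Per-organ DSC from flat integer label maps (0 is assumed to be background)."""
    return {
        organ: dice_score([p == organ for p in pred_labels],
                          [t == organ for t in truth_labels])
        for organ in organ_ids
    }

# Toy example with two hypothetical organ labels (1 and 2).
pred_labels = [0, 1, 1, 2, 2, 0]
truth_labels = [0, 1, 1, 1, 2, 2]
scores = organ_wise_dice(pred_labels, truth_labels, organ_ids=[1, 2])
print(scores)  # {1: 0.8, 2: 0.5}
```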